Dr. Murari Mandal

Assistant Professor, KIIT Bhubaneswar

Post-Doc, National University of Singapore (NUS)

101-H, Campus 14

KIIT Bhubaneswar

Odisha, India 751024

I am an Assistant Professor at the School of Computer Engineering, KIIT Bhubaneswar. I lead the RespAI Lab, where we focus on advancing large language models (LLMs) by addressing challenges in long-context processing, inference efficiency, interpretability, and alignment. Our research also explores synthetic persona creation, regulatory issues, and innovative methods for model merging, knowledge verification, and unlearning. My research has been published at ICML, KDD, AAAI, ACM MM, and CVPR. Check out my research group’s website here: RespAI Lab. I regularly serve as a reviewer for NeurIPS, ICML, ICLR, AAAI, CVPR, ICCV, and ECCV, and my work is indexed in CSRankings. I am also part of the BrainX AI research community, actively working on AI in Healthcare research!

Research Impact: My pioneering work has had a significant impact on the field of Machine Unlearning, with follow-up works by Anthropic, Yoshua Bengio, Google DeepMind, and others. With 600+ citations, our papers Fast Unlearning [TNNLS], Zero-shot Unlearning [TIFS], and Bad Teacher [AAAI] are among the top 10 most highly cited papers in the field of Machine Unlearning.

Earlier, I was a Postdoctoral Research Fellow at the National University of Singapore (NUS), where I worked with Prof. Mohan Kankanhalli in the School of Computing. Long before that, I graduated in 2011 with a Bachelor's degree in Computer Science from BITS Pilani. Find me on X @murari_ai.

"When you go to hunt, hunt for rhino. If you fail, people will say anyway it was very difficult. If you succeed, you get all the glory"

Note for prospective students interested in joining my research group.

News

| Date | Update |
|---|---|
| Aug 21, 2025 | Paper accepted to the EMNLP 2025 Main Track, Suzhou, China [Acceptance Rate - 22.16%]. Congratulations Debdeep 🎉🎉 |
| Aug 21, 2025 | Paper accepted to EMNLP 2025 Findings, Suzhou, China [Acceptance Rate - 17.35%]. Congratulations Aakash 🎉🎉 |
| Jul 17, 2025 | Guardians of Generation: Dynamic Inference-Time Copyright Shielding with Adaptive Guidance for AI Image Generation has been accepted to the Unlearning and Model Editing Workshop at ICCV 2025! 🎉 |
| Jul 08, 2025 | Agents Are All You Need for LLM Unlearning has been accepted to #COLM2025! 🎉 |
| Jun 22, 2025 | Un-Star paper accepted to TMLR. |
| May 31, 2025 | Preprint of “OrgAccess: A Benchmark for Role Based Access Control in Organization Scale LLMs” is available on arXiv. |
| May 25, 2025 | Preprint of “Investigating Pedagogical Teacher and Student LLM Agents: Genetic Adaptation Meets Retrieval Augmented Generation Across Learning Style” is available on arXiv. |
| May 25, 2025 | Preprint of “Nine Ways to Break Copyright Law and Why Our LLM Won’t: A Fair Use Aligned Generation Framework” is available on arXiv. |
| Apr 25, 2025 | Excited to join the BrainX AI team to work on cutting-edge AI in Healthcare research! |
| Apr 10, 2025 | Preprint of “Right Prediction, Wrong Reasoning: Uncovering LLM Misalignment in RA Disease Diagnosis” is available on arXiv. |
| Mar 20, 2025 | Preprint and source code of “Guardians of Generation: Dynamic Inference-Time Copyright Shielding with Adaptive Guidance for AI Image Generation” are available! |
| Mar 17, 2025 | RespAI Lab is offering “Introduction to Large Language Models” at KIIT Bhubaneswar in Spring 2025. Course website: https://respailab.github.io/llm-101.respailab.github.io |
| Feb 07, 2025 | Preprint of “ReviewEval: An Evaluation Framework for AI-Generated Reviews” is available on arXiv. |
| Jan 20, 2025 | Preprint of “ALU: Agentic LLM Unlearning” is available on arXiv. |
| Dec 22, 2024 | Invited talk on “Machine Unlearning for Responsible AI” at IndoML 2024. |

Selected Publications


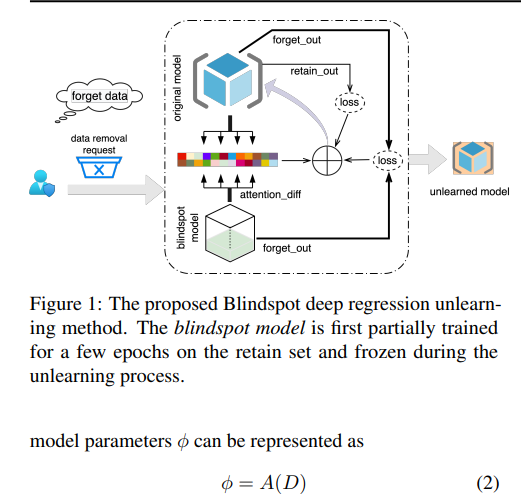

Deep Regression Unlearning. In Proceedings of the 40th International Conference on Machine Learning, 23–29 Jul 2023


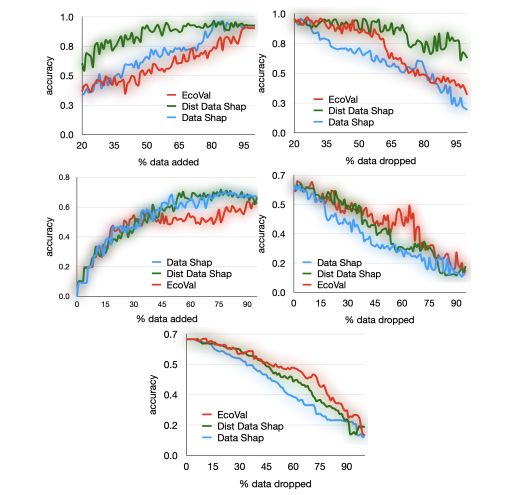

EcoVal: An Efficient Data Valuation Framework for Machine Learning. 23–29 Jul 2024


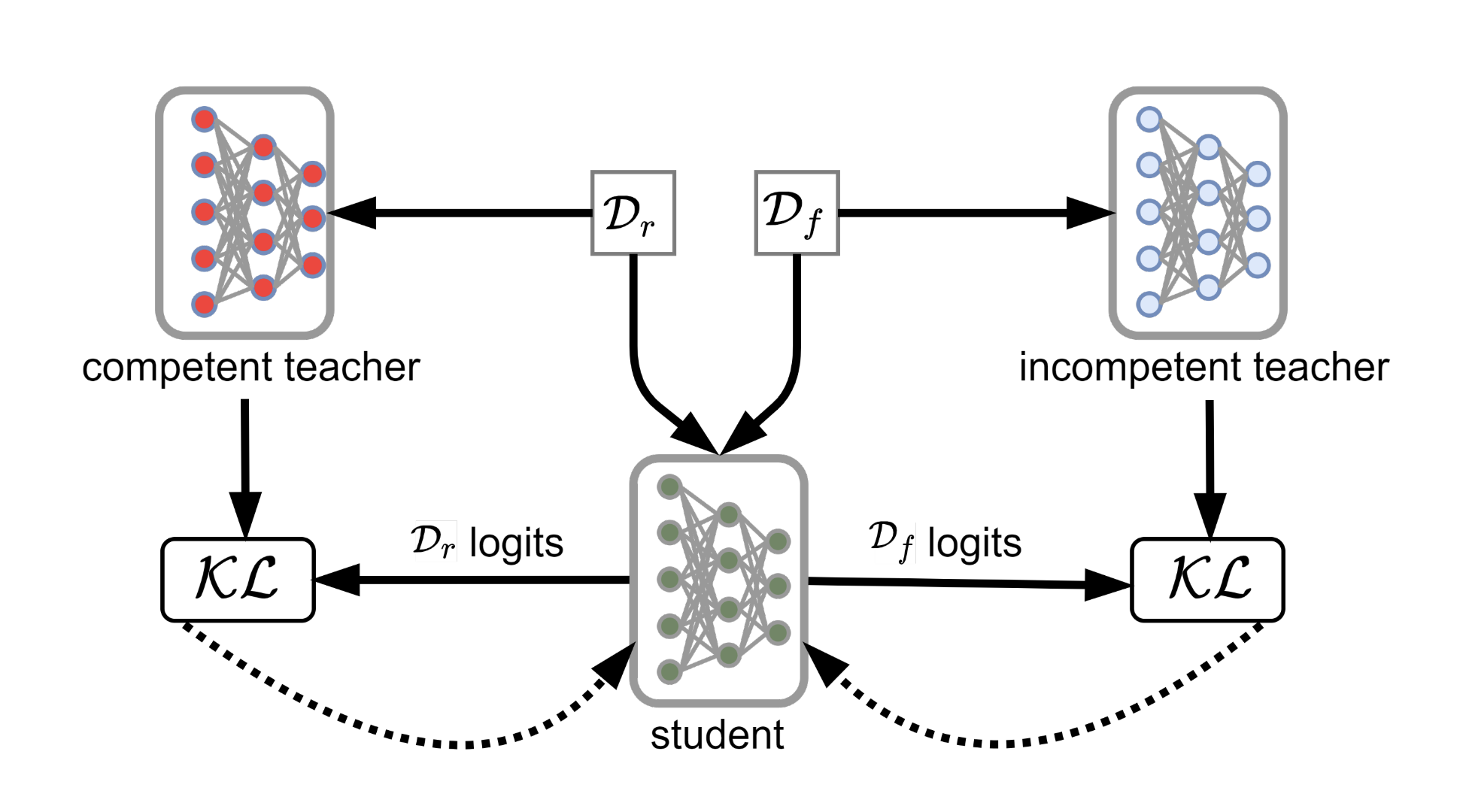

Can Bad Teaching Induce Forgetting? Unlearning in Deep Networks Using an Incompetent Teacher. Proceedings of the AAAI Conference on Artificial Intelligence, Jun 2023
